Charli D'Amelio Deepfakes

Deepfakes have become a growing concern in the digital age, and social media influencer Charli D'Amelio has been among their targets. The term, a portmanteau of "deep learning" and "fake," refers to synthetic or altered media that appears authentic. The technology has been used to create hyper-realistic videos and images, often with malicious intent, and Charli D'Amelio's experience shows why the issue needs to be understood and addressed.

Understanding Deepfakes: A Brief Overview

Deepfakes leverage advanced artificial intelligence techniques, particularly deep neural networks, to manipulate or synthesize media content. These networks are trained on vast datasets, enabling them to learn and mimic human features, expressions, and movements. The result is the ability to seamlessly replace one person’s face or body with another’s, often with remarkable accuracy.

The potential applications of this technology are vast. While deepfakes can be used for creative purposes, such as in film or gaming, their misuse can have serious consequences. They can be employed to spread misinformation, damage reputations, and even facilitate identity theft. The ease with which deepfakes can be created and the difficulty in detecting them make them a significant challenge for content creators, platforms, and users alike.

Charli D’Amelio: A Target of Deepfake Manipulation

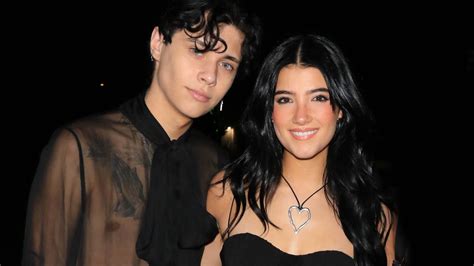

Charli D’Amelio, a social media sensation known for her dance videos and lighthearted content, has not been spared from the reach of deepfake technology. Despite her young age and relatively short career, Charli has become one of the most recognizable faces on social media platforms like TikTok and Instagram.

In 2021, reports emerged of deepfake videos featuring Charli's face superimposed onto other individuals' bodies. These videos, often sexually explicit in nature, circulated on various online platforms, including social media and underground websites. The impact of such manipulation was profound, not only for Charli but also for her fans and the broader online community.

The deepfake videos of Charli D'Amelio highlight several key issues. Firstly, they demonstrate the ease with which a person's image can be exploited and their reputation damaged. Secondly, they reveal the potential for deepfakes to invade personal privacy and cause emotional distress. Lastly, they underscore the need for better detection and mitigation strategies to combat this growing threat.

The Impact and Response: Charli’s Experience

The impact of deepfake videos on Charli D’Amelio was significant. As a young influencer, she found herself at the center of a storm, with her name and image being used in ways she never consented to. The videos not only violated her privacy but also threatened her personal safety and the trust she had built with her millions of followers.

Charli's response was twofold. Firstly, she addressed the issue publicly, using her platform to raise awareness about deepfakes and their potential dangers. She emphasized the importance of critical thinking and encouraged her followers to question the authenticity of online content. Secondly, she took legal action, working with law enforcement and technology experts to identify and remove the deepfake videos. This proactive approach set an example for other influencers and content creators facing similar challenges.

Addressing the Deepfake Threat: Industry and User Efforts

The deepfake phenomenon has spurred a range of responses from industry leaders, technology developers, and content platforms.

Industry Initiatives

Tech companies and researchers are actively developing tools and techniques to detect and mitigate deepfakes. These efforts include the creation of datasets specifically designed to train deepfake detection models, the development of advanced algorithms to identify manipulated content, and the exploration of blockchain technologies to verify media authenticity.

Furthermore, there is a growing focus on ethical guidelines and regulations to govern the use of deepfake technology. Organizations like the Partnership on AI are working to establish principles that encourage responsible innovation while mitigating potential harms. These initiatives aim to strike a balance between fostering technological advancement and protecting individual rights and privacy.
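One of the industry efforts mentioned above, verifying media authenticity, can be illustrated with a minimal sketch. It assumes a creator registers a cryptographic fingerprint of the original file at publication time, so that anyone can later check whether a copy matches it. The `MediaRegistry` class and the `clip-001` identifier are hypothetical, and the in-memory dictionary merely stands in for whatever tamper-evident ledger (a blockchain, for instance) a real system might use.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the raw media bytes."""
    return hashlib.sha256(data).hexdigest()


class MediaRegistry:
    """Hypothetical in-memory stand-in for a tamper-evident ledger."""

    def __init__(self):
        # Maps a media identifier to the fingerprint recorded at publication.
        self._records = {}

    def register(self, media_id: str, data: bytes) -> str:
        """Record the fingerprint of the original media and return it."""
        digest = fingerprint(data)
        self._records[media_id] = digest
        return digest

    def verify(self, media_id: str, data: bytes) -> bool:
        """Return True only if the bytes match the registered original."""
        expected = self._records.get(media_id)
        return expected is not None and expected == fingerprint(data)


registry = MediaRegistry()
original = b"original video bytes"  # placeholder for a real file's contents
registry.register("clip-001", original)

print(registry.verify("clip-001", original))            # unchanged copy
print(registry.verify("clip-001", original + b"edit"))  # altered copy
```

A check like this only shows whether a file is byte-for-byte identical to a registered original. It does not detect manipulation on its own, and a harmless re-encoding would also change the fingerprint, which is why provenance approaches are usually discussed alongside detection models rather than as a replacement for them.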

Platform Policies and User Education

Social media platforms, recognizing the severity of the deepfake issue, have implemented policies to combat the spread of manipulated content. These policies often involve content moderation teams, automated detection systems, and user reporting mechanisms. Platforms also provide educational resources to help users identify and report deepfakes, promoting a collective effort to maintain the integrity of online spaces.

User Vigilance and Critical Thinking

Ultimately, the battle against deepfakes requires a combination of technological advancements and user vigilance. Users need to learn to recognize the signs of manipulated content and to approach online media with a critical eye. This includes being cautious of unusual or sensational content, verifying information against multiple sources, and supporting initiatives that promote digital literacy and online safety.

The Future of Deepfakes: Potential and Challenges

The future of deepfakes is both promising and fraught with challenges. On one hand, the technology has the potential to revolutionize various industries, from entertainment to education. It can enhance special effects in movies, enable more immersive gaming experiences, and even assist in medical training simulations. On the other hand, the misuse of deepfakes remains a significant concern, with the potential to exacerbate existing social and political issues.

As deepfake technology continues to evolve, so too must the strategies to detect and combat it. This includes ongoing research and development in AI and machine learning, as well as a commitment to ethical practices and responsible innovation. The key to mitigating the risks associated with deepfakes lies in a collaborative effort between technology developers, industry leaders, policymakers, and users.

Conclusion: Navigating the Deepfake Landscape

The case of Charli D’Amelio and deepfakes underscores the critical need for awareness, education, and proactive measures in the face of emerging technologies. While deepfakes present both opportunities and challenges, the emphasis must be on responsible development and use. As we move forward, it is essential to strike a balance between embracing technological advancements and safeguarding individual rights, privacy, and the integrity of online spaces.

How can individuals protect themselves from deepfake manipulation?

Individuals can take several steps to protect themselves from deepfake manipulation. Firstly, monitor your online presence and be proactive in securing personal information. Secondly, be cautious of unsolicited messages or requests, especially those asking for personal details or financial information. Thirdly, stay informed about the latest deepfake detection technologies and use reputable tools to verify the authenticity of media content. Lastly, report any suspected deepfakes to the appropriate platforms or authorities.

What are some potential solutions to combat the spread of deepfakes?

To combat the spread of deepfakes, a multi-pronged approach is necessary. This includes developing advanced detection technologies, implementing robust content moderation policies on social media platforms, and promoting digital and media literacy education. Additionally, collaboration between tech companies, researchers, and policymakers can lead to effective strategies and regulations to mitigate the impact of deepfakes.

How can we ensure that deepfake technology is used ethically and responsibly?

Ensuring the ethical and responsible use of deepfake technology requires a combination of regulatory measures, industry guidelines, and public awareness. Governments and regulatory bodies can implement laws and policies that govern the development and use of deepfakes, holding developers and users accountable for any malicious or harmful activities. Tech companies can establish internal guidelines and best practices to promote responsible innovation. Finally, public education campaigns can help raise awareness about the potential risks and ethical considerations associated with deepfake technology.